On Friday, 11 February, Tom of the BEHAVE group presented a poster on his paper “Why general moral values cannot predict moral behavior in daily life” (co-authored by Maarten Kroesen and Caspar Chorus) at the Conference of the Society for Judgment and Decision Making. The conference is a leading annual meeting in the field of judgment and decision making research, attracting scholars from all parts of the world. Tom presented a conceptual and exploratory empirical study on the relationship between general moral values and specific moral behavior in daily life. The study concludes that general moral values are poor predictors of moral behavior, and that the questionnaires commonly used to measure people’s moral values, such as the Moral Foundation Questionnaire, are too general to capture the context-sensitivity of moral decision making. This is a link to our poster. For more information on our study, see the abstract below.

Abstract

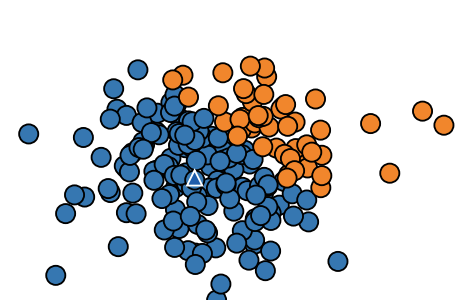

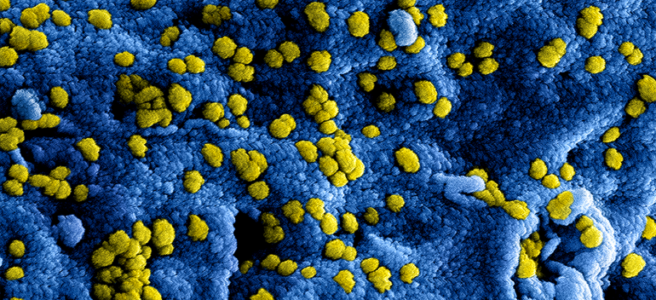

Throughout the behavioral sciences it is routinely assumed that general, basic moral values have a considerable influence on people’s behavior in daily life. Although this assumption is a key underpinning of major strands of research, a solid theoretical and empirical foundation for it is lacking. In this study, we explore the relationship between general moral values and daily life behavior through a conceptual analysis and an empirical study. Our conceptual analysis of the relationship between moral values and moral behavior suggests that the effect of a generally endorsed moral value on moral behavior is highly context-dependent. It requires the materialization of several phases of moral decision making, each influenced by many contextual factors. We expect that this renders the relationship between generic moral values and people’s specific moral behavior indeterminate. Subsequently, we empirically explore this relationship in three different studies. We relate two different measures of general moral values, the Moral Foundation Questionnaire and the Morality as a Compass Questionnaire, to a broad set of self-reported morally relevant daily life behaviors (including adherence to Covid-19 measures and participation in voluntary work). Our empirical results are in line with the expectations derived from our conceptual analysis: the general moral values we considered prove to be rather poor predictors of the selected daily life behaviors. Furthermore, our results show that moral values tailored to the specific context of the behavior are somewhat stronger predictors. Together with the insights derived from our conceptual analysis, this points to the contextual nature of moral decision making as a possible explanation for the poor predictive value of general moral values.
Accordingly, our conceptual and empirical findings suggest that the implicit assumption that general moral values are predictive of specific moral behavior, a key underpinning of empirical moral theories such as Moral Foundation Theory, lacks foundation and may need revision.